So, as everyone else is ramping up for Atlassian’s big event next week, are you stuck at home watching from afar? Don’t worry! The Jira Guy is hooking you up with four can’t-miss remote sessions you can sign up to watch for free! I’ll also be talking about some of the places you can find me if you are there next week – including a meet and greet session! #AtlassianCreator

Tag: Jira

Troubled Waters Ahead – April 2024 Security Bulletin

Atlassian released their April Security Bulletin yesterday. Let’s dig into this, see what all the excitement is about, and see what people are getting wrong about it. Are you impacted by this?

Naming Conventions in Jira

Today, we look at a user-requested topic and discuss how you should name objects in Jira. Ever wonder how you can trace a screen or workflow back to a project without an audit? It’s all in how you name it! What are some of your naming rules?

Why Jira?

Why should you even pick Jira? Today I discuss why it is a better platform, as well as prepare you to deal with the naysayers. Why did you pick Jira?

It’s Snowing CVEs

Last night, Atlassian released four new security advisories covering many of their products. What versions are impacted, and what should you do to protect yourself? We’ll cover all that and more today!

Why So Many Jiras?

Ever wonder what the difference is between Jira Software and Service Management, or why Jira Work Management exists? What even is JPD? Today we go over all of that and more! What’s your favorite flavor of Jira?

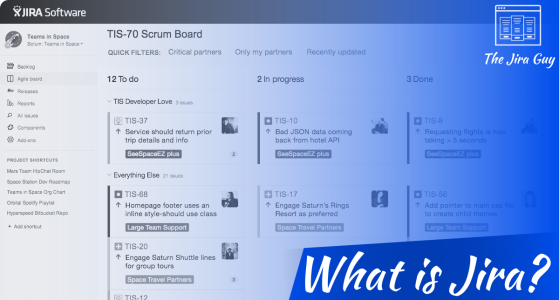

What is Jira?

Are you new to Jira, and not even sure how to pronounce it, let alone what it is? Then join in for the first post in a series meant to bring you from Jira newbie to Jira Guy or Gal!

When Should You Restart Jira?

How do you decide when to restart Jira? Are regular restarts even necessary? Today we look at just that and answer a reader’s question! How do you plan your Jira restarts?

The Case for GitLab

Today I discuss why I use GitLab, and why someone would want to use both GitLab and Bitbucket Cloud. What toolchain are you using?

Atlassian Creators

Did you know about the #AtlassianCreators? This is a new pilot program from Atlassian to support the community creating content for Atlassian Admins and Users. Today I share a few Atlassian Creators you might want to know about! Who are you following?