Have you heard about the latest product to hit beta on Atlassian Cloud? Today we look at Confluence Whiteboards, and what features it currently has. Have you gotten to use this new tool?

Tag: apps

Are Free Apps going extinct?

A reader came to me concerned about the future of free Apps on the Atlassian Marketplace. So let’s take a look at what the actual data says to see how things might be changing!

What is Automation for Confluence?

Have you heard about Atlassian’s latest offering? Today we look at Automation for Confluence – which promises to do what A4J did for Jira in Confluence! How would you use this?

Myths about Moving to Atlassian Cloud: My Take

Have you ever read an Atlassian white paper and wonder how much of it was spin? I have! So I decided to read through one and post my thoughts! What are some questions you have about Jira Cloud?

View post to subscribe to site newsletter.

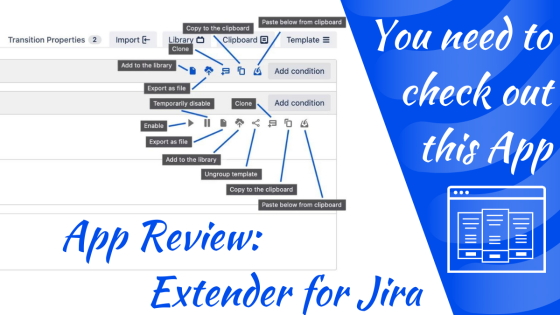

App Review: Extender for Jira

Today we check out an #Jira App recommended to me by a #JiraAdmin just like you. Do you have any #Marketplace Apps you think I should check out?

App Benchmarking!

Do you know how each App you run impacts your performance on #Jira? Today I outline a test you can run to get exactly that information! #Atlassian #Marketplace #App #Benchmarking

View post to subscribe to site newsletter.

Four Apps to add a Personal touch to Jira

Does your #Jira instance feel a bit cold and robotic? Today we review four Apps that will bring a more human feel to your Jira instance! #Atlassian

App Review: Profields by Deiser

Sometimes, you just need more details about your #Jira projects. Good thing Deiser heard us with their app Profields! #AppForEveryTeam #Atlassian

View post to subscribe to site newsletter.

App Review: Rich Filters by Qotilabs

Do you ever feel you need to drill down into your #Jira dashboard a bit more? Qotilabs has the solution to help you with just that! #AppforEveryTeam #Atlassian

View post to subscribe to site newsletter.

App Review: JIRA Workflow Toolbox by Decadis

Do you wish you had one App that let you run automation rules, calculate automated fields, and did #JIRA Workflow functions? Today we review how Decadis will grant your wish!

View post to subscribe to site newsletter.